Populating the Lakehouse: Lessons learned moving from on-premises to the Lakehouse with Microsoft Fabric in the retail industry

Microsoft Fabric has the potential to make a big impact on the BI landscape with its integrated approach and its promise of bringing a complete data ecosystem into one cohesive platform. To get there, we need to start where modern cloud technology meets the realities of our on-premises databases. In this blog article we explore some lessons we learned when going through this process.

One word of caution: MS Fabric is going through rapid changes as it moves from preview to general availability in the following weeks, so a number of things we describe here may change in the near future. Also, please note that our experience may be biased by our previous use of the predecessor tools in Azure, namely Data Factory and Synapse Analytics.

To us, one of the most appealing aspects of Fabric is the underlying OneLake: Microsoft took a bold step and declared Delta the standard format for storing data so that it can then be exploited efficiently. Over the last few years, we have seen a steady increase in the adoption of Parquet and its cousin Delta in data platforms as an efficient and inexpensive way of storing data. However, it was mainly offered as a valid alternative rather than as “the way” to store data. So, I was interested to see how this would be adopted in practice in Fabric and, for the purposes of this article, in its initial storage layer.

Lesson 1: The Power BI ecosystem is declared victorious

In most places where the Azure tools and Power BI differed on how to do things, the Power BI flavor was chosen.

The component of MS Fabric that gathers data from on-premises sources is Data Factory (Fabric edition). Our first question was how to achieve connectivity to on-premises systems. Azure Data Factory does this through an intermediate component, the “Integration Runtime”, so we expected to have to install it. As it turns out, everything in Fabric stays close to the Power BI experience, and products have changed from their previous implementations to favor the Power BI way of doing things. So, to connect to on-premises sources, the Data Gateway has to be used.

Also, once you connect with a Dataflow to gather the data, the way you connect to the source objects and define filters and the like is very close to the Power BI and Power Query experience. Moving from the tried and tested approach of writing queries for your extractions to this more graphical approach was a little shocking. I have always believed that writing queries, while more technically challenging, gives you more control over exactly what happens: where filters are applied, which hints are sent to the source database, how subqueries are used.
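
To make the point about where filters are applied more concrete, here is a minimal sketch in Python. It is not Fabric or Power Query code: an in-memory SQLite database stands in for the on-premises source, and the `sales` table, its columns and the two helper functions are all hypothetical names chosen for illustration.

```python
import sqlite3

# Assumption: an in-memory SQLite database plays the role of the on-premises source.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (store_id INTEGER, sale_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        (1, "2023-09-30", 10.0),
        (1, "2023-10-01", 20.0),
        (2, "2023-10-02", 30.0),
        (2, "2023-08-15", 40.0),
    ],
)


def extract_with_pushdown(conn, since):
    """Hand-written query: the date filter is evaluated inside the source database."""
    cur = conn.execute(
        "SELECT store_id, sale_date, amount FROM sales "
        "WHERE sale_date >= ? ORDER BY sale_date",
        (since,),
    )
    return cur.fetchall()


def extract_then_filter(conn, since):
    """Filter applied after extraction: every row leaves the source first."""
    rows = conn.execute(
        "SELECT store_id, sale_date, amount FROM sales ORDER BY sale_date"
    ).fetchall()
    kept = [row for row in rows if row[1] >= since]
    return kept, len(rows)


pushed = extract_with_pushdown(conn, "2023-10-01")
filtered, rows_transferred = extract_then_filter(conn, "2023-10-01")

print("rows returned by the pushed-down query:", len(pushed))
print("rows transferred before filtering locally:", rows_transferred)
```

Both functions return the same rows, but the second one moves the whole table across the network first. With a hand-written query you decide this explicitly, which is the kind of control we were used to.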

Lesson 2: Fewer choices are better

With MS Fabric, Microsoft seems to have said: “If we’re going to have a unified, all-encompassing data ecosystem, we can’t have people doing things any way they want”. The biggest example of this is the fact that everything is now stored in Delta format (Delta Lake) in OneLake.

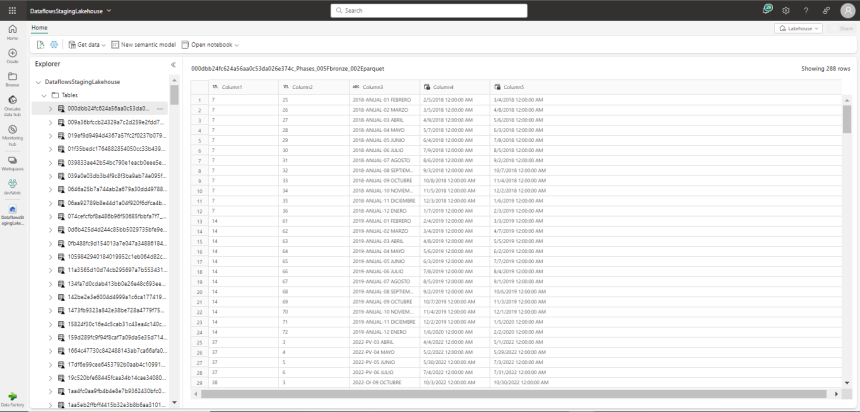

Also, the suggested way to store information in general, and more importantly in the Lakehouse, is to follow the Medallion architecture: Bronze for raw and staging data, Silver for more refined data and Gold for things such as finished business KPIs. So, no more philosophical debates over how to store information in a data lake; at least in Fabric, it should be done using this pattern.
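
As a rough illustration of the three layers, the sketch below pushes a handful of made-up retail records through Bronze, Silver and Gold using plain Python structures. In Fabric each layer would be a set of Delta tables in the Lakehouse; the field names, the cleaning rules and the revenue-per-store KPI are assumptions for the example, not something prescribed by the platform.

```python
from collections import defaultdict

# Bronze: raw records exactly as landed from the source (hypothetical data).
bronze = [
    {"store": "S01", "sku": "A", "qty": "2", "price": "9.50"},
    {"store": "S01", "sku": "A", "qty": "2", "price": "9.50"},  # re-delivered record
    {"store": "S02", "sku": "B", "qty": "x", "price": "4.00"},  # unparseable quantity
    {"store": "S02", "sku": "C", "qty": "1", "price": "20.00"},
]


def to_silver(rows):
    """Silver: typed, validated rows with exact duplicates removed."""
    seen = set()
    clean = []
    for row in rows:
        try:
            typed = (row["store"], row["sku"], int(row["qty"]), float(row["price"]))
        except ValueError:
            # Assumption: invalid rows are skipped; a real pipeline would quarantine them.
            continue
        if typed not in seen:
            seen.add(typed)
            clean.append(typed)
    return clean


def to_gold(rows):
    """Gold: a finished business KPI, here revenue per store."""
    revenue = defaultdict(float)
    for store, _sku, qty, price in rows:
        revenue[store] += qty * price
    return dict(revenue)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

The useful property of the pattern is that each layer has one job: Bronze keeps what arrived, Silver makes it trustworthy, and Gold answers a business question.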

One last aspect we want to highlight for this blog piece: if you’re bringing your data into the Bronze layer, Fabric will create an intermediate folder where it stores all the Parquet files it needs to bring the data into MS Fabric and give it a unified view. It won’t tell you that this folder exists or ask how these Parquet files should be managed, but it will be there, underlying the solution.

Lesson 3: Not all features and perks are available … yet

As MS Fabric evolves, some features are being brought into the tool and some aren’t available yet.

Focusing on bringing data from other source systems, we feel that one of the most powerful features of Azure Data Factory is Metadata Copy: you define some metadata, point to your source system, and let Azure Data Factory do a large portion of the heavy lifting associated with managing deltas and with adding or removing the tables that have to be read. This is mainly achieved via pipelines that can access a plethora of data sources, including on-premises databases.

In MS Fabric, for now, the data sources that you can use in Pipelines are more limited than what you can bring in with Dataflows; as of this writing, only four databases are supported. This translates into a larger effort to bring in large numbers of tables and large volumes of data than with the Azure set of tools, where a clever use of pipelines lets you loop over data copy activities, whether using the metadata copy or your own version.
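
For readers who have not seen the metadata-driven pattern, the sketch below shows the idea behind a home-grown version in Python. It is not the Azure Data Factory implementation: `TableConfig`, `build_extract_query`, `plan_copy` and the table names are hypothetical, and the sketch only plans the extraction queries rather than running any copy activity.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class TableConfig:
    """One row of a hypothetical control table describing what to copy."""
    schema: str
    table: str
    watermark_column: Optional[str] = None  # None means a full reload
    last_watermark: Optional[str] = None    # highest value already loaded
    enabled: bool = True                    # flip to False to stop reading a table


def build_extract_query(cfg: TableConfig) -> str:
    """Return an incremental query when a watermark is known, else a full read."""
    base = f"SELECT * FROM {cfg.schema}.{cfg.table}"
    if cfg.watermark_column and cfg.last_watermark is not None:
        # Values come from our own control table, so plain interpolation is used here;
        # anything user-supplied should be passed as a query parameter instead.
        return f"{base} WHERE {cfg.watermark_column} > '{cfg.last_watermark}'"
    return base


def plan_copy(control_table: List[TableConfig]) -> List[Tuple[str, str]]:
    """Loop over the metadata and plan one extraction per enabled table."""
    return [
        (f"{cfg.schema}.{cfg.table}", build_extract_query(cfg))
        for cfg in control_table
        if cfg.enabled
    ]


control_table = [
    TableConfig("dbo", "Sales", "ModifiedAt", "2023-10-01T00:00:00"),
    TableConfig("dbo", "Stores"),
    TableConfig("dbo", "LegacyPromotions", enabled=False),
]

plan = plan_copy(control_table)
for name, query in plan:
    print(name, "->", query)
```

Adding or removing a table then becomes a change to the metadata rather than to the pipeline itself, which is what makes the approach attractive when there are many tables to bring in.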

Also, this may mean that some things that you implement today could benefit from some optimization as more features become available.

Lesson 4: Change is the only constant, but lots of promise

Lots of things are changing in the MS Fabric ecosystem; in the last month alone we have seen things managed differently and subtle changes to the UI. Even so, given the current pace, we believe that most features are mature enough to start implementing some projects.

Bringing data from on-premises to the Lakehouse today may require a little more work than we’d like, but having everything in a single ecosystem that, from our point of view, relies on winning paradigms (Delta Lake, Medallion architecture) is well worth the extra effort to get ahead of the curve.